
Beyond text-based exchanges

In today's AI applications and chatbots, user interactions are usually limited to simple text-based exchanges: users type commands or questions, and the chatbot replies with answers. While conversing in natural language is convenient, some answers are better presented in a richer UI. The same goes for user actions: for some tasks, interacting with a component is easier than writing text. This is where Generative UI comes into play.

Generative UI takes interaction further by letting the interface generate custom responses in real time, based on user input. This shift is not just about aesthetics; it's about functionality. With Generative UI, a chatbot can present complex information as a product comparison, a chart, a table, a form, and more, which makes every user's journey unique. Instead of navigating a fixed set of menus or forms, users hold a natural conversation with the chatbot, which dynamically generates UI components tailored to the context of the interaction.

Simplifying Generative UI integration in chatbots: the POC

Integrating Generative UI into a chatbot can be technically challenging. Since LLM inputs and outputs are purely text, we need a way to instruct the model to respond in a form that lets us display custom UI components, and our app must be able to interpret those responses to render the UI correctly. Fortunately, Vercel's release of AI SDK 3 made this task much more manageable. With this library, adding Generative UI to a chatbot comes down to a single configuration that defines the available functions, UI components, and so on. Vercel's SDK uses React Server Components, and the UI is streamed from the server just like text responses, enhancing the app's performance.
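The core contract can be illustrated without any SDK: the model returns either plain text or a structured tool call (a tool name plus JSON arguments), and the app maps each tool name to a renderer. The minimal sketch below uses hypothetical names throughout (ModelOutput, renderers, showClaimsList) and returns strings where a real app would return React components; it is not how the AI SDK is implemented internally.

```typescript
// A model turn is either free text or a structured request to render a tool's UI.
type ModelOutput =
  | { kind: "text"; text: string }
  | { kind: "tool-call"; toolName: string; args: Record<string, unknown> };

// Hypothetical renderers: in a real app these would return React components.
type Renderer = (args: Record<string, unknown>) => string;

const renderers: Record<string, Renderer> = {
  showCarSelector: () => "<CarSelector />",
  showClaimsList: (args) => `<ClaimsList status="${String(args.status ?? "all")}" />`,
};

// Turn a model output into something the chat can display.
function render(output: ModelOutput): string {
  if (output.kind === "text") {
    return output.text;
  }
  const renderer = renderers[output.toolName];
  // Unknown tool names fall back to a plain message instead of crashing the UI.
  return renderer ? renderer(output.args) : `Unsupported tool: ${output.toolName}`;
}

console.log(render({ kind: "text", text: "Hi! How can I help?" }));
console.log(render({ kind: "tool-call", toolName: "showClaimsList", args: { status: "open" } }));
```

Keeping the registry keyed by tool name is what lets the model "choose" a component: it only ever emits a name and arguments, and the app decides what that name looks like on screen.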

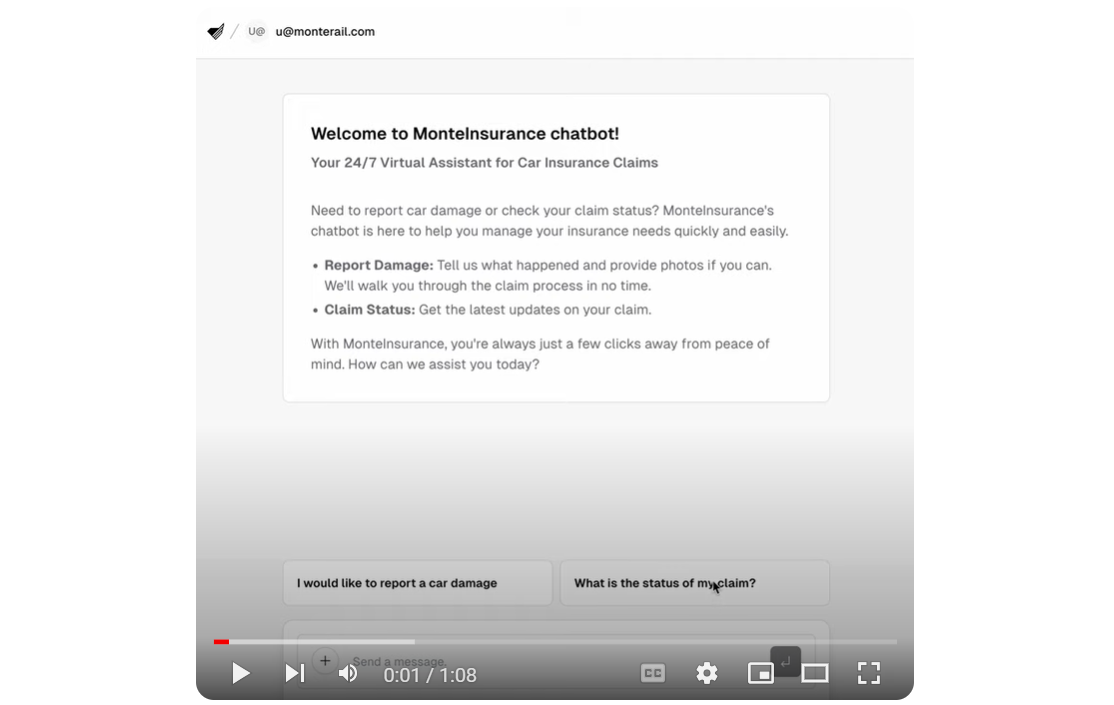

We wanted to test this approach, so we built a Proof of Concept (PoC) to showcase the capabilities of Vercel's AI SDK with Generative UI: a chatbot for an imaginary insurance company. The objective was to simplify the complex process of submitting an insurance claim, making it more intuitive and interactive. Our PoC drew significant inspiration from Vercel's Next.js AI Chatbot demo, an excellent starting point for anyone interested in learning about the AI SDK and Generative UI.


The chatbot serves two main purposes: reporting car damage and checking the status of submitted claims. Both tasks can be completed through a simple conversation with the bot in natural language. To enhance the experience, we included interactive components that the LLM can display whenever it deems them suitable for the given context. The features include:

Car Selector: Users interact with a custom React component to select their car from a list associated with their account when reporting a claim, rather than using a text prompt.

Parts Selector: A dynamic diagram allows users to visually select and specify damaged car parts, standardizing the submitted part names and aiding those unfamiliar with the exact terminology.

Claims List: Users can view a list of their current insurance claims and their statuses through an interactive component that provides real-time data.

Summary Display: The chatbot compiles the provided information into an interactive summary, enabling users to review, edit, and confirm the details of their report.
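The standardization the Parts Selector provides comes from the component only being able to emit names from a fixed list, unlike free-form text. A minimal sketch of that idea, assuming a hypothetical list of part identifiers and a hypothetical message format (the PoC's actual list and format are not shown here):

```typescript
// Hypothetical canonical part identifiers; each diagram region maps to exactly one of them.
const CAR_PARTS = ["front-bumper", "rear-bumper", "hood", "left-door", "right-door", "windshield"] as const;
type CarPart = (typeof CAR_PARTS)[number];

// Clicking a region toggles it; the result stays in diagram order with no duplicates.
function togglePart(selected: readonly CarPart[], part: CarPart): CarPart[] {
  const next = selected.includes(part)
    ? selected.filter((p) => p !== part)
    : [...selected, part];
  return CAR_PARTS.filter((p) => next.includes(p));
}

// The text sent back to the model contains only canonical names, never free text.
function describeDamagedParts(parts: readonly CarPart[]): string {
  return `[User selected damaged parts: ${parts.join(", ")}]`;
}

let selection: CarPart[] = [];
selection = togglePart(selection, "hood");
selection = togglePart(selection, "front-bumper");
console.log(describeDamagedParts(selection));
```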

Technical implementation of the dynamic chatbot UI with Vercel's AI SDK

The app's behavior is mainly determined by the submitUserMessage() server action. This action takes the user's input as an argument and returns a result, which can be either streamed text or UI. It also updates and synchronizes the AI state, which contains the context and data communicated to the AI model, including system messages, function responses, and other relevant information. The action uses the streamUI helper function from the Vercel AI SDK (specifically, from the ai/rsc package) to stream answers as UI. Note that this functionality relies on React Server Components, so it only works with frameworks that support them (e.g., Next.js).
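Since the AI state is essentially a serializable message history, it helps to see its shape. The sketch below gives illustrative types for the messages used in this article's snippets (user and assistant text, assistant tool-call parts, tool-result parts) and a pure helper that appends messages the way the aiState.update({...aiState.get(), messages: [...]}) calls do. The type names, the chatId field and appendMessages are assumptions for illustration; the SDK's own message types are richer.

```typescript
// Illustrative shapes for the messages kept in the AI state (not the SDK's exact types).
type ToolCallPart = { type: "tool-call"; toolName: string; toolCallId: string; args: unknown };
type ToolResultPart = { type: "tool-result"; toolName: string; toolCallId: string; result: unknown };

type Message =
  | { id: string; role: "user" | "system"; content: string }
  | { id: string; role: "assistant"; content: string | ToolCallPart[] }
  | { id: string; role: "tool"; content: ToolResultPart[] };

interface AIState {
  chatId: string; // hypothetical: identifies the conversation
  messages: Message[];
}

// Return a new state with messages appended; the previous state object is left untouched.
function appendMessages(state: AIState, ...messages: Message[]): AIState {
  return { ...state, messages: [...state.messages, ...messages] };
}

const s0: AIState = { chatId: "demo", messages: [] };
const s1 = appendMessages(s0, { id: "1", role: "user", content: "I need to report damage" });
console.log(s0.messages.length, s1.messages.length);
```

Building a new object on every update, rather than mutating the array, mirrors the spread pattern in the snippets below and keeps each snapshot of the history intact.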

Here's a basic example that handles only text messages:

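```tsx
import type { ReactNode } from 'react'
import { openai } from '@ai-sdk/openai'
import { createStreamableValue, getMutableAIState, streamUI } from 'ai/rsc'
import { nanoid } from 'nanoid'
// App-local chat bubble components (hypothetical path). `AI` is the provider
// created with createAI() in this same file.
import { BotMessage, SpinnerMessage } from '@/components/message'

async function submitUserMessage(content: string) {
  'use server'

  // Server-side AI state: the conversation history the model sees
  const aiState = getMutableAIState<typeof AI>()

  // Record the new user message in the AI state
  aiState.update({
    ...aiState.get(),
    messages: [
      ...aiState.get().messages,
      {
        id: nanoid(),
        role: 'user',
        content
      }
    ]
  })

  let textStream: undefined | ReturnType<typeof createStreamableValue<string>>
  let textNode: undefined | ReactNode

  const result = await streamUI({
    model: openai('gpt-3.5-turbo'),
    // Rendered immediately, before the model produces any output
    initial: <SpinnerMessage />,
    system: "[...]", // System prompt defining the app's job and desired behaviour
    messages: [
      ...aiState.get().messages.map((message: any) => ({
        role: message.role,
        content: message.content,
        name: message.name
      }))
    ],
    // Called as text arrives: `delta` is the new chunk, `content` the full text so far
    text: ({ content, done, delta }) => {
      // Create the streamable value and its UI node on the first chunk
      if (!textStream) {
        textStream = createStreamableValue('')
        textNode = <BotMessage content={textStream.value} />
      }

      if (done) {
        // Close the stream and persist the complete answer in the AI state
        textStream.done()
        aiState.done({
          ...aiState.get(),
          messages: [
            ...aiState.get().messages,
            {
              id: nanoid(),
              role: 'assistant',
              content
            }
          ]
        })
      } else {
        // Push only the newest chunk to the client
        textStream.update(delta)
      }

      return textNode
    }
  })

  // `display` is the streamed React node shown in the chat
  return {
    id: nanoid(),
    display: result.value
  }
}
```

The function first updates the AI state with the new user message and then uses the streamUI helper to return the answer. This example only handles text messages, but it already streams a React node (<BotMessage content={textStream.value} />) rather than plain text.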
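Each textStream.update(delta) call sends only the newest chunk, so the code reading the stream on the client has to concatenate the chunks to show the growing answer. The sketch below reproduces that producer/consumer contract with a plain async generator instead of the SDK's streamable value; fakeModelDeltas and renderStream are hypothetical stand-ins, not AI SDK functions.

```typescript
// Hypothetical stand-in for the model: yields the answer in small chunks (deltas).
async function* fakeModelDeltas(answer: string, chunkSize = 4): AsyncGenerator<string> {
  for (let i = 0; i < answer.length; i += chunkSize) {
    yield answer.slice(i, i + chunkSize);
  }
}

// Consumer side: append each delta and report the text rendered so far.
async function renderStream(
  deltas: AsyncIterable<string>,
  onRender: (textSoFar: string) => void
): Promise<string> {
  let text = "";
  for await (const delta of deltas) {
    text += delta;
    onRender(text);
  }
  return text;
}

renderStream(fakeModelDeltas("Your claim was submitted."), (t) => console.log(t));
```

Sending deltas keeps each update small; the trade-off is that the receiver must keep the running total itself.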

Now, let's add interactivity to the chatbot, using the Car Selector feature as an example. Generative UI relies on function calls (tools), which can run complex logic, such as fetching and processing external data, before rendering a UI component. We define the functions available to the model in the tools parameter of streamUI. Here's the definition of the showCarSelector tool:

```tsx
// Part of the streamUI({ ... }) options inside submitUserMessage, so `aiState`
// is the mutable AI state from above. `z` comes from 'zod' and `nanoid` from
// 'nanoid'; auth, getUserCars, BotCard, CarSelector and CarSelectorSkeleton are app-local.
tools: {
  showCarSelector: {
    // Tells the model when to call this tool
    description:
      'Show user cars and UI for them to select a car. Use it when you want to ask the user to select a car.',
    // This tool takes no arguments
    parameters: z.object({}),
    // Async generator: `yield` streams interim UI, `return` provides the final UI
    generate: async function* () {
      yield (
        <BotCard>
          <CarSelectorSkeleton />
        </BotCard>
      )

      // Fetch the signed-in user's cars
      const session = await auth()
      const cars = await getUserCars(session?.user?.id)

      // Record the tool call and its result in the AI state so the model
      // knows which cars were offered in later turns
      const toolCallId = nanoid()

      aiState.done({
        ...aiState.get(),
        messages: [
          ...aiState.get().messages,
          {
            id: nanoid(),
            role: 'assistant',
            content: [
              {
                type: 'tool-call',
                toolName: 'showCarSelector',
                toolCallId,
                args: {} // the tool's input arguments (none for this tool)
              }
            ]
          },
          {
            id: nanoid(),
            role: 'tool',
            content: [
              {
                type: 'tool-result',
                toolName: 'showCarSelector',
                toolCallId,
                result: cars
              }
            ]
          }
        ]
      })

      return (
        <BotCard>
          <CarSelector props={cars} />
        </BotCard>
      )
    }
  },

  // ...other tools definitions
}
```

First, we provide a description that guides the model on when to use the function. Next, we define the required parameters (here there are none) and create a generate function that contains all the logic. Note that generate is an asynchronous generator function: its first yield renders a skeleton component right away; it then fetches data from an external source, records the tool call and its result in the AI state's message history, and finally returns the UI component that displays the car selector.
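The skeleton-then-selector behaviour follows from generate being an async generator. The framework-free sketch below shows that control flow under stated assumptions: strings replace React elements, getUserCars is a hypothetical stub rather than the PoC's data source, and renderTool stands in for the framework. It drains the generator with next() because a for await...of loop would discard the final return value.

```typescript
interface Car { id: string; make: string; model: string }

// Hypothetical stub standing in for the PoC's getUserCars() data source.
async function getUserCars(_userId: string): Promise<Car[]> {
  await Promise.resolve(); // simulate asynchronous I/O
  return [
    { id: "c1", make: "Skoda", model: "Octavia" },
    { id: "c2", make: "VW", model: "Golf" },
  ];
}

// Same shape as a streamUI tool's generate(): yield interim UI, then return the final UI.
async function* showCarSelector(userId: string): AsyncGenerator<string, string> {
  yield "<CarSelectorSkeleton />";
  const cars = await getUserCars(userId);
  return `<CarSelector cars="${cars.map((c) => c.id).join(",")}" />`;
}

// Collect every UI the generator produces, in order: each yield, then the return value.
async function renderTool(gen: AsyncGenerator<string, string>): Promise<string[]> {
  const frames: string[] = [];
  for (;;) {
    const step = await gen.next();
    frames.push(step.value);
    if (step.done) return frames;
  }
}

renderTool(showCarSelector("user-1")).then((frames) => console.log(frames));
```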


Once the component is displayed, we need to capture the user's input from it. When the user selects one of the cars, we call submitUserMessage with a message that describes the action and includes the selected car's details, as shown below:

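```tsx
'use client'

import { useState } from 'react'
import { useActions, useUIState } from 'ai/rsc'
// Hypothetical app-local paths: the createAI() provider and a Card UI component
import type { AI } from '@/lib/chat/actions'
import { Card } from '@/components/ui/card'

type Car = { id: string } // hypothetical: the real shape comes from getUserCars()

export function CarSelector({ props: cars }: { props: Car[] }) {
  const [selectedCar, setSelectedCar] = useState<Car | null>(null)
  const [, setMessages] = useUIState<typeof AI>()
  const { submitUserMessage } = useActions()

  return (
    <>
      {cars.map(car => (
        <Card
          key={car.id}
          onClick={async () => {
            // Ignore clicks after the first selection
            if (!selectedCar) {
              setSelectedCar(car)
              // Report the UI action to the model as a user message
              const response = await submitUserMessage(
                `[User selected car: ${JSON.stringify(car)}]`
              )
              setMessages(currentMessages => [...currentMessages, response])
            }
          }}
        >
          {/* Car details */}
        </Card>
      ))}
    </>
  )
}
```

Implementing the other features follows a similar pattern: each interactive component has a corresponding tool defined in the submitUserMessage() action.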
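The handler above turns a click into an ordinary user message that describes the action, so the model receives it just like typed input, while the selectedCar check makes the selection one-shot. A framework-free sketch of both ideas follows; makeSelectionHandler and its send callback are hypothetical stand-ins for the component state and the submitUserMessage action.

```typescript
interface Car { id: string; make: string; model: string }

// Encode a UI action as text the model can read, using the article's bracketed format.
function describeCarSelection(car: Car): string {
  return `[User selected car: ${JSON.stringify(car)}]`;
}

// Hypothetical one-shot handler: only the first selection is sent, mirroring the
// `if (!selectedCar)` guard in the component.
function makeSelectionHandler(send: (message: string) => Promise<void>) {
  let selected: Car | null = null;
  return async (car: Car): Promise<boolean> => {
    if (selected) return false; // a car was already chosen; ignore further clicks
    selected = car;
    await send(describeCarSelection(car));
    return true;
  };
}

const log: string[] = [];
const onSelect = makeSelectionHandler(async (message) => {
  log.push(message);
});
onSelect({ id: "c1", make: "Skoda", model: "Octavia" }).then(() => console.log(log));
```

Serializing the whole car object keeps the message self-describing, so the model can refer to the make and model in its reply without another lookup.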

You can view the final result of the app in this video, Generative UI integration in chatbots: the POC.

Navigating complexity in Generative UI integration

Integrating Generative UI into chatbot applications with the Vercel AI SDK is a significant step forward for interactive app development. The approach simplifies development and improves the user experience with fast, responsive interactions built on React Server Components: the server handles the logic and rendering, and the resulting UI is streamed to the client.

While Generative UI simplifies app development, it also introduces the complexity of prompt engineering. Getting the model to render the right UI components at the right time requires careful tuning, and at times the outcomes can be unpredictable. The model tends to be more reliable when responding to direct user requests, such as displaying specific data, than when guiding the interaction, such as walking a user through a claim submission. Using a model fine-tuned for this specific context may resolve these issues.

In conclusion, Generative UI is ready to offer new ways of engaging users, with interfaces that adapt to the conversation and respond quickly. As the technology evolves, it is expected to overcome its current limitations and unlock new possibilities.
